In 1921, Lewis Fry Richardson wasn’t satisfied with the current state of weather forecasting. His criticism of the paradigm was fundamental: it relied on pattern-matching instead of modeling the physics of the atmosphere. In the preface of his book Weather Prediction By Numerical Process, which would go on to revolutionize how meteorologists forecast the weather, he described the dominant approach:

The process of forecasting, which has been carried on in London for many years, may be typified by one of its latest developments, namely Col. E. Gold’s Index of Weather Maps. It would be difficult to imagine anything more immediately practical. The observing stations telegraph the elements of present weather. At the head office these particulars are set in their places upon a large-scale map. The index then enables the forecaster to find a number of previous maps which resemble the present one. The forecast is based on the supposition that what the atmosphere did then, it will do again now. There is no troublesome calculation, with its possibilities of theoretical or arithmetical error. The past history of the atmosphere is used, so to speak, as a full-scale working model of its present self.

Richardson, as well as meteorologists Cleveland Abbe and Vilhelm Bjerknes, wanted to infuse the discipline of meteorology with a more scientific approach. Indeed, Abbe wrote in 1901 that the predictions, “merely represent the direct teachings of experience; they are generalizations based upon observations but into which physical theories have as yet entered in only a superficial manner if at all.”

I was unable to find the Index of Weather Maps (if anyone has a digital copy, please get in touch!), but it seems as though Richardson was referring to Ernest Gold’s Aids to Forecasting (1920). The book is so old that I do not have a copy of it either, but a review in Science notes that Gold surveyed the Met Office’s Daily Weather Report for fourteen years of its reports and categorized the weather into similar “indices”. A forecaster could then check the index to find a match for current weather and follow how the weather unfolded in those past examples. The weather prediction would be the closest match to present conditions. However, as the Science reviewer noted, “The weakness of any such classification is, of course, the assumption that like surface isobaric conformations are always followed by similar weather conditions.”

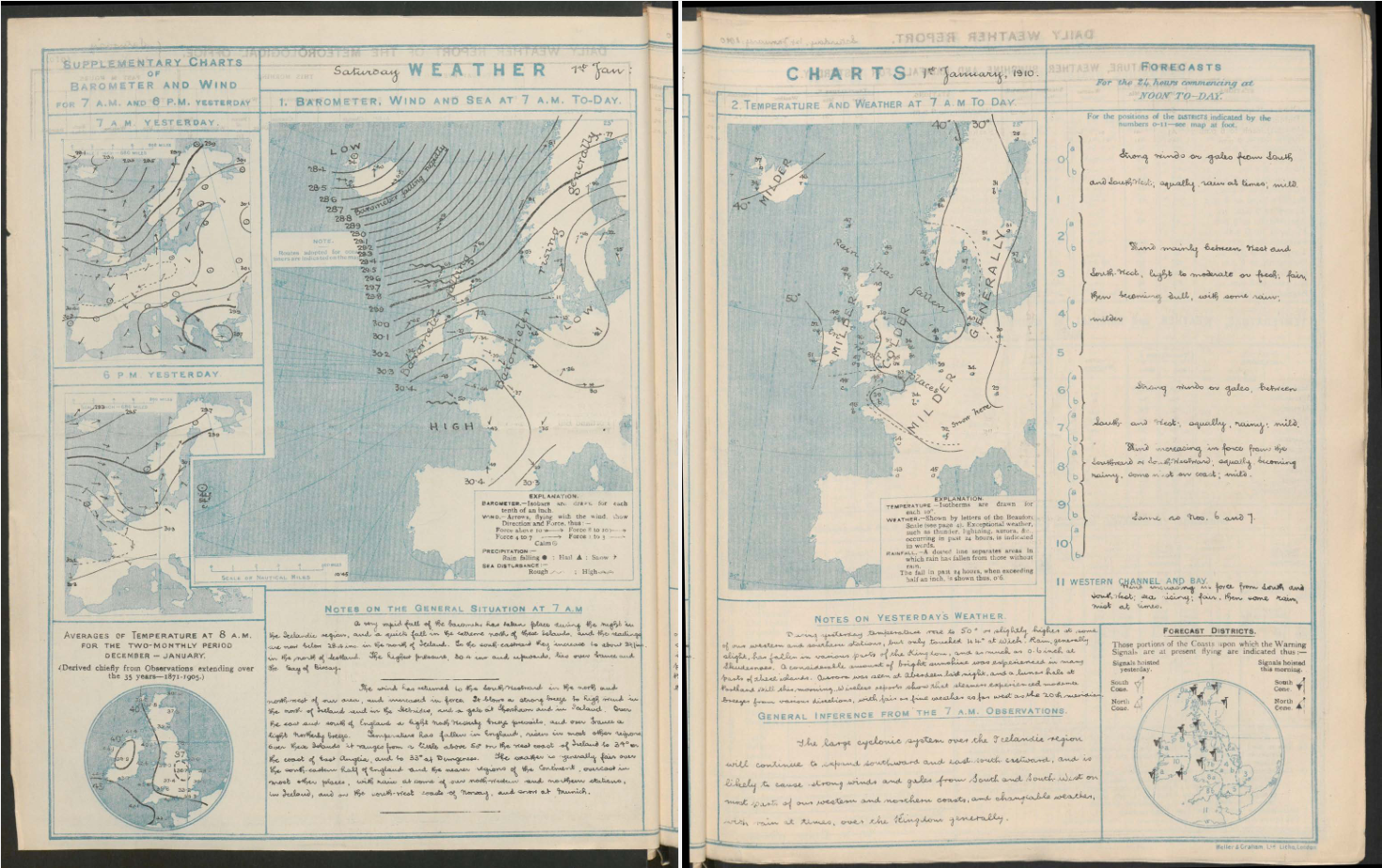

To give you a sense of what these weather reports looked like, below are a few pages for the January 1, 1910 entry. It’s essentially a logbook, where the writer collates all the incoming information from the weather stations, fills out the blank maps to show readings for the wind, pressure (barometer), and temperature, and includes particular notes for the day.

Richardson wasn’t satisfied with searching through an index though. He admired a different publication: The Nautical Almanac (a log of predictions for star positions to help with sea navigation, as well as official dates for holidays). He wrote, “that marvel of accurate forecasting, is not based on the principle that astronomical history repeats itself in the aggregate.” If you take a look at this copy, you will quickly encounter formulas and mathematical expressions. This is in contrast to the weather reports, which were just measurements. While Richardson valued data (this is how he made his predictions, after all), he thought simply comparing to old data was bound to be a dead end. Instead, he wanted to model the weather as a physics problem. And he did, resulting in a framework that helps us forecast the weather much more accurately into the future than we once did.

What’s striking to me about Richardson’s criticism is that it boiled down to this: Pattern matching with data isn’t enough for useful prediction. And while that may seem reasonable, haven’t we just seen a remarkable reversal of that idea in the form of machine learning? Large language models in particular are becoming more and more capable of accomplishing tasks. Can we use machine learning for weather prediction?

There are three reasons to be hopeful about machine learning techniques in weather prediction. First, meteorological stations have rich data sets stretching back decades (and some for over a century). This means extensive training data for models1. In fact, the European Centre for Medium-Range Weather Forecasts (ECMWF) reports that their forecasts take up terabytes of data, and will significantly increase over time (see Figure 11 of the report)2. Second, there’s always a ground truth for weather forecasts. Assuming the observational instruments are working correctly, we can rapidly see if a prediction was right or wrong. This helps when evaluating the performance of a model. Third, running a traditional weather prediction model is expensive. That’s because you must simulate the physics of the atmosphere, which has feedback loops at multiple scales and requires solving many interdependent equations. The exact energy, time, and cost footprints depend on the model, hardware, and kind of simulation (near-term weather versus climate predictions), but climate scientist Tapio Schneider said in a recent article that a climate simulation can consume up to 10 megawatt hours of energy. For context, the article notes that this is about the average energy consumption of a U.S. household in a year. While it’s true that training machine learning models is an upfront cost, there are huge efficiencies in actually outputting a forecast, such as taking one minute on a single TPU to produce a 10-day forecast versus hours on supercomputers with hundreds of machines3.

What caught my attention though was the involvement of the ECMWF in exploring machine learning for their operations. The ECMWF is a research institute but it is also an operational service, providing weather forecasts every day to many countries. It’s one thing to have academics interested in using a new type of tool to solve a problem. It’s quite another for an organization who has a product to consider incorporating a new tool into their workflow.

In 2021, the ECMWF released a ten-year roadmap for how they plan to incorporate machine learning methods into their workflow. They note that, “Machine learning can be used to improve the computational efficiency of weather and climate models, to extract information from data, or to post-process model output, in particular if data-driven machine learning methods can be combined with conventional tools.” Though we have faster computers than ever before, conventional weather models always require more compute, because we want higher resolution, longer forecasts, and more simulations for predictions. Any gain in compute efficiency will translate into increasing these variables.

Many of their efforts and those in the past have occurred in only the past few years, which means there is still a ton to do in this space. The ECMWF’s own initial perspective was that machine learning was an interesting avenue to explore, but “the likelihood of it becoming operational was low, so it was not a wise investment of ECMWF resources.” And yet, they realized that the situation changed in 2022 and 2023, when companies such as NVIDIA, Huawei, and DeepMind released machine learning models that rivaled traditional methods.). These results had enough of an impact on ECMWF that they are now investigating how to incorporate machine learning into their workflow.

They also note that there are many challenges in using machine learning tools for weather forecasting. These include the requirement for custom machine learning solutions for the weather, refactoring code to take advantage of the machine learning libraries in Python or Julia (many conventional weather forecasting code is in Fortran), and barriers in communication between Earth scientists and machine learning researchers. The ECMWF’s plans to address these challenges over the course of their roadmap.

The future of operational weather forecasting probably won’t be just machine learning or just physics-based simulation, but a hybrid of the two. Hopefully, the ECMWF and other organizations can figure out how to augment and complement their workflows such that machine learning becomes an asset, not just a curiosity. I’m excited by the potential reduction in compute time using machine learning, which would allow meteorologists to combine a greater number of simulations to make an ensemble prediction.

Going back to Abbe, Bjerknes, and Richardson, it appears that enough data really can lead to good predictions. What we’ve seen in the past few years with machine learning is that scale matters. To echo what Philip Anderson once said within the context of condensed matter physics, “More is different.”

Endnotes

-

Note that older data may not be as useful, since it wasn’t as comprehensive as our data collection system today. ↩

-

They also say that this volume of data is becoming more and more difficult to handle, which poses a problem as they aim to increase the resolution of their forecasts (requiring more data). ↩

-

A tensor processing unit is a device that Google built specifically for machine learning applications. ↩